How would you design a Distributed Cache for a High-Traffic System?

How to design a distributed cache that serves millions of requests per second — and explain it like a pro in your next backend interview.

Hello guys! Modern high-traffic systems don’t fail because of business logic; they fail because of scale.

When millions of users hit your APIs simultaneously, your database becomes the bottleneck long before your application code does. That’s why distributed caching is no longer an optimization — it’s a necessity.

But designing a distributed cache for a high-traffic system isn’t just about plugging in Redis and calling it a day. You need to think about cache invalidation, eviction policies, replication, consistency trade-offs, hot keys, failover strategies, and how your cache behaves under sudden traffic spikes. Get it wrong, and your cache becomes the problem instead of the solution.

In this guest post, Solomon Eseme of Backend Weekly breaks down how to design a distributed cache that can handle real-world scale — the kind used in systems serving millions of requests per second. From architectural choices to scaling strategies and failure handling, this guide walks through the key decisions every engineer should understand.

If you’re preparing for system design interviews or building large-scale systems in production, this deep dive will sharpen your thinking and give you practical insights you can apply immediately.

Let’s get into it.

The Interview Scenario

You’re in a backend interview.

They ask:

“How would you design a distributed cache for a high-traffic system?”

Here’s how to approach it:

Now, let’s start by clarifying why caching exists in the first place.

Understand the problem

Solving any problem becomes much simpler once you understand it.

If you want to boost the performance of your backend systems, you need caching.

Caching is the process of storing frequently accessed data temporarily in high-speed storage. This storage is called a cache.

Caching speeds up the retrieval of frequently accessed data because most reads are served from the cache instead of hitting the database.

The goals are to:

Reduce DB load.

Improve latency for frequently accessed data.

Handle millions of requests with low overhead.

So how do you design a distributed cache for a high-traffic system where multiple services run concurrently in a microservice architecture?

The High-Level Architecture

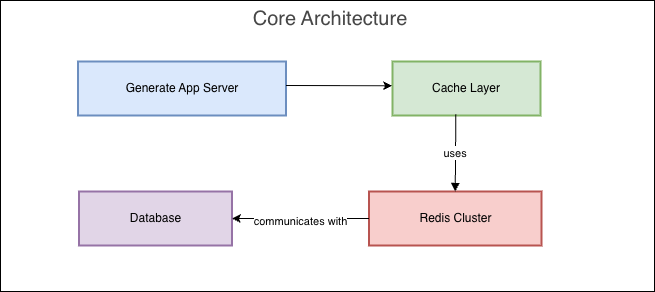

Let’s look at the core components of this architecture:

App Servers: These are the backend servers, such as an Express or Go server, that handle all incoming requests. They can be deployed across different regions and built with any server-side language.

Cache Layer: The cache layer is any of the variants discussed below: a local cache, a centralized cache, or a hybrid of both.

Redis Cluster: This assumes we pick Redis as our cache service. We can add as many cache nodes as we need to meet our throughput and latency requirements.

Database: The centralized database for our application.

Here are the 3 variants you can choose to build with:

Local Cache (In-memory): Fastest but inconsistent across nodes. Example: using node-cache or lru-cache.

Centralized Cache (e.g., Redis, Memcached): Shared across instances, consistent view. Needs replication and scaling.

Hybrid Cache: Local cache for ultra-fast reads. Centralized cache for synchronization.
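To make the hybrid variant concrete, here is a minimal sketch of a two-tier read path. It is an illustration under assumptions, not a reference implementation: `HybridCache`, `createFakeRemote`, and the 5-second local TTL are hypothetical names and values, and the shared tier is modeled as any client exposing async `get`/`set` so the sketch runs without a Redis server.

```javascript
// Two-tier read: check the in-process Map first, then the shared cache.
// `remote` is any client with async get(key) / set(key, value) (assumption).
class HybridCache {
  constructor(remote, localTtlMs = 5000, now = () => Date.now()) {
    this.remote = remote;
    this.localTtlMs = localTtlMs; // short TTL bounds how stale a node can get
    this.now = now;
    this.local = new Map(); // key -> { value, expiresAt }
  }

  async get(key) {
    const entry = this.local.get(key);
    if (entry && entry.expiresAt > this.now()) return entry.value; // local hit
    this.local.delete(key); // expired or absent
    const value = await this.remote.get(key); // shared-cache lookup
    if (value !== null && value !== undefined) {
      this.local.set(key, { value, expiresAt: this.now() + this.localTtlMs });
      return value;
    }
    return null; // miss in both tiers
  }

  async set(key, value) {
    await this.remote.set(key, value); // shared tier is the common view
    this.local.set(key, { value, expiresAt: this.now() + this.localTtlMs });
  }
}

// Hypothetical in-memory stand-in for a Redis-like client.
function createFakeRemote() {
  const store = new Map();
  return {
    async get(key) { return store.has(key) ? store.get(key) : null; },
    async set(key, value) { store.set(key, value); },
  };
}
```

The short local TTL is the trade-off knob here: a node may serve a value that another node has already changed, but only until its local entry expires.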

Caching Strategies

Next, let’s explore some of the caching strategies. Here are three classic strategies:

Cache-Aside (Lazy Loading): The app checks the cache first. On a miss, it fetches the data from the database and writes it to the cache for subsequent reads. It is simple and caches only data that is actually requested, but the first read of each key pays the full database latency (a cold start).

Write-Through: This strategy writes to the cache and the DB together. The cached data stays fresh, but write latency increases.

Write-Behind: This strategy writes to the cache first and then writes to the DB asynchronously. Writes are faster, but data can be lost if the cache fails before the DB write completes.

You can choose the Cache-Aside strategy because it’s simple, scalable, and among the most common in production backend systems.
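As a rough sketch of the cache-aside read path, assuming a cache client with async `get(key)` and `set(key, value, ttlSeconds)` methods (a simplified, hypothetical interface, not the exact signature of any Redis client) and a hypothetical `loadFromDb` loader:

```javascript
// Cache-aside read: check the cache, fall back to the DB on a miss,
// then populate the cache for subsequent reads.
async function getOrLoad(cache, key, loadFromDb, ttlSeconds = 300) {
  const cached = await cache.get(key);
  if (cached !== null && cached !== undefined) {
    return JSON.parse(cached); // cache hit
  }

  const fresh = await loadFromDb(key); // cache miss: go to the database
  if (fresh !== null && fresh !== undefined) {
    // Only cache real results; values are stored as JSON strings.
    await cache.set(key, JSON.stringify(fresh), ttlSeconds);
  }
  return fresh;
}
```

A call might look like `await getOrLoad(cache, "user:42", (k) => db.findUser(k))`, where `db.findUser` is again a placeholder for your own data access code.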

Eviction and Consistency Policy

Cache memory is limited, so at some point you must evict data to make room for new entries. That’s where choosing the best eviction policy comes in:

Here are some of the common eviction policies:

LRU (Least Recently Used): evicts the keys that have gone the longest without being accessed.

LFU (Least Frequently Used): evicts the keys that are accessed least often.

TTL (Time-to-Live): automatically removes data once its expiry time passes.
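To show what LRU eviction means in practice, here is a small in-memory sketch. It relies on the fact that a JavaScript `Map` iterates keys in insertion order; the class name `LruCache` is illustrative, and a cache server such as Redis applies its own eviction internally rather than this code.

```javascript
// Minimal LRU cache: the first key in the Map is always the least recently used.
class LruCache {
  constructor(capacity) {
    this.capacity = capacity;
    this.map = new Map();
  }

  get(key) {
    if (!this.map.has(key)) return undefined;
    const value = this.map.get(key);
    // Re-insert to mark the key as most recently used.
    this.map.delete(key);
    this.map.set(key, value);
    return value;
  }

  set(key, value) {
    if (this.map.has(key)) this.map.delete(key);
    this.map.set(key, value);
    if (this.map.size > this.capacity) {
      // Evict the least recently used key (the oldest entry in the Map).
      const oldestKey = this.map.keys().next().value;
      this.map.delete(oldestKey);
    }
  }
}
```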

For consistency, think about what happens when your database changes. If a row is updated in the database, your cache may keep serving the old value until the entry is invalidated or expires.

You can use these strategies to solve that problem:

Use cache invalidation on writes.

Add short TTLs.

Use event-driven updates (e.g., publish DB changes to cache via Kafka).
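The first option, invalidation on writes, can be sketched as follows. This assumes a cache client with an async `del(key)` method and a hypothetical `db.save(key, value)` call; updating the database first and then deleting the cached copy is one common ordering, not the only valid one.

```javascript
// Invalidate-on-write: update the source of truth, then drop the cached copy
// so the next read repopulates it with fresh data.
async function updateAndInvalidate(cache, db, key, value) {
  await db.save(key, value); // 1. write to the database first
  await cache.del(key);      // 2. remove the now-stale cache entry
}
```

Pairing this with a short TTL gives a safety net: if the delete fails or races with a concurrent read, the stale entry still expires on its own.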

While building a distributed cache, you might run into some challenges, and it’s good to share them with your interviewer.

Some Distributed Challenges:

Let’s explore some of the challenges and how to solve them:

1. Cache Stampede

This challenge happens when many requests hit a missing key simultaneously and all of them go to the database at once.

Fixes:

Use request coalescing. Design your system in a way that only one request repopulates the cache.

Use the “lock & populate” mechanism with Redis locks.
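Request coalescing inside a single process can be sketched like this. The names `inFlight` and `coalesce` are hypothetical, and the sketch only deduplicates work within one server; across servers you would still need something like a Redis lock (for example, `SET` with the `NX` option and an expiry).

```javascript
// Request coalescing: concurrent callers for the same key share one load.
const inFlight = new Map(); // key -> Promise for the pending load

function coalesce(key, loader) {
  const pending = inFlight.get(key);
  if (pending) return pending; // piggyback on the load already running

  const promise = Promise.resolve()
    .then(() => loader(key))
    // Clear the entry on success or failure so later calls can retry.
    .finally(() => inFlight.delete(key));

  inFlight.set(key, promise);
  return promise;
}
```

In a cache-aside flow, `loader` would be the function that queries the database and writes the result back to the cache, so a burst of misses on one key triggers a single database query per server.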

2. Thundering Herd Problem

Next, let’s look at the thundering herd problem. Here, many keys expire at once, which leads to a sudden spike in DB load.

To fix this, add randomized TTLs or soft TTLs to prevent all keys from expiring simultaneously.

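```javascript
// Base TTL of 1 hour plus up to 5 minutes of jitter.
// EX must be an integer number of seconds, so round the random part.
await redis.set(cacheKey, data, { EX: 3600 + Math.floor(Math.random() * 300) });
```

Next, let’s look at how to scale a distributed cache.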

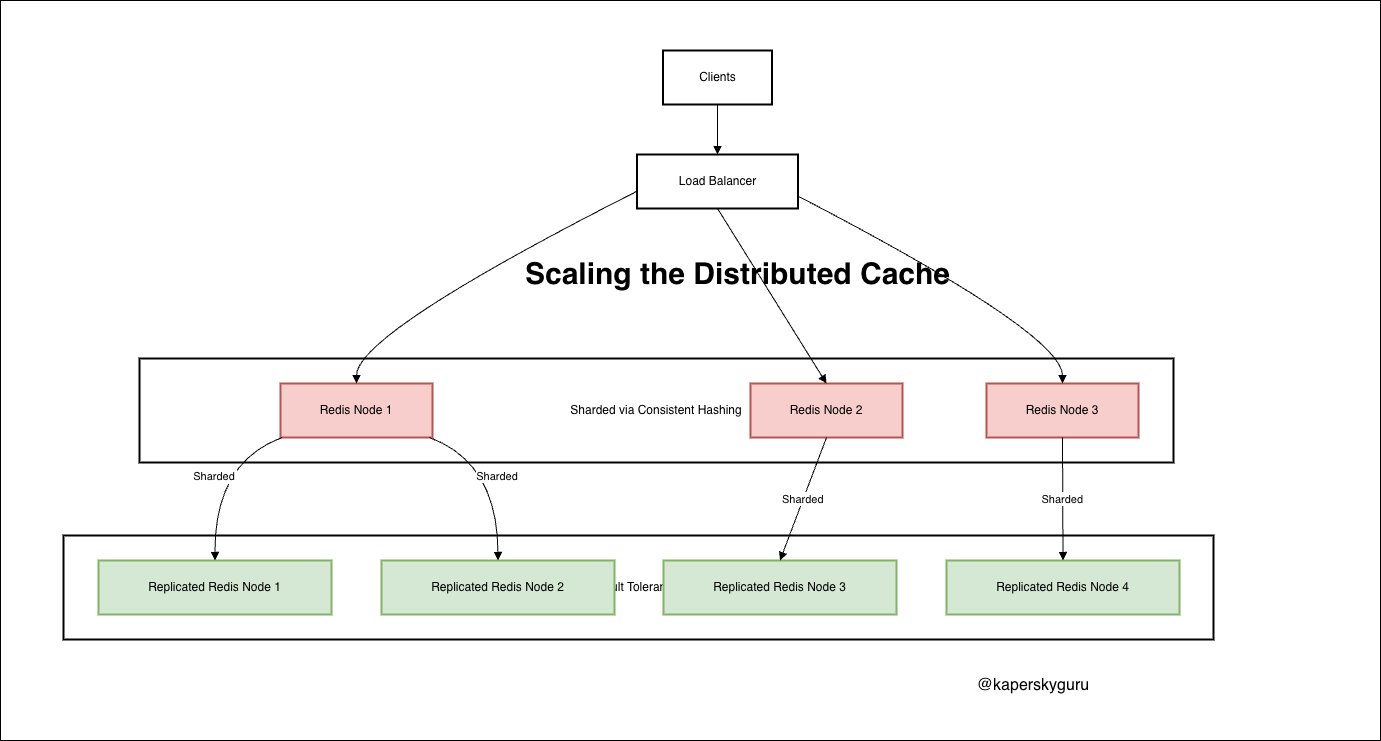

Scaling the Distributed Cache

Scaling a distributed cache is hard, so let’s make it simple to help you in your interviews.

When your cache grows large, single-node Redis won’t cut it.

Here are some of the techniques used to scale it properly:

Sharding: Split keys across multiple servers.

Consistent Hashing: Avoid massive reshuffles when nodes are added/removed.

Replication: Use replicas for fault tolerance.

Multi-Region Caches: Deploy regional cache clusters close to users.
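To illustrate sharding with consistent hashing, here is a small hash-ring sketch. It is a teaching example under assumptions: `HashRing`, the FNV-1a hash, and 100 virtual nodes per server are choices made for this sketch, and clustered cache products ship their own key-distribution schemes.

```javascript
// 32-bit FNV-1a hash; any well-distributed hash function would work here.
function fnv1a(str) {
  let h = 0x811c9dc5;
  for (let i = 0; i < str.length; i++) {
    h ^= str.charCodeAt(i);
    h = Math.imul(h, 0x01000193) >>> 0;
  }
  return h >>> 0;
}

class HashRing {
  constructor(nodes = [], virtualNodes = 100) {
    this.virtualNodes = virtualNodes; // more points per node = smoother spread
    this.ring = []; // sorted array of { point, node }
    nodes.forEach((node) => this.addNode(node));
  }

  addNode(node) {
    for (let i = 0; i < this.virtualNodes; i++) {
      this.ring.push({ point: fnv1a(`${node}#${i}`), node });
    }
    this.ring.sort((a, b) => a.point - b.point);
  }

  removeNode(node) {
    this.ring = this.ring.filter((entry) => entry.node !== node);
  }

  // A key belongs to the first ring point at or after its hash (wrapping around).
  getNode(key) {
    if (this.ring.length === 0) return null;
    const h = fnv1a(key);
    let lo = 0;
    let hi = this.ring.length;
    while (lo < hi) {
      const mid = (lo + hi) >>> 1;
      if (this.ring[mid].point < h) lo = mid + 1;
      else hi = mid;
    }
    return this.ring[lo % this.ring.length].node;
  }
}
```

The property that matters in an interview: when a node is removed, only the keys that lived on that node move to a new owner, while every other key stays where it was.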

While scaling your distributed cache systems, here are some additions that will come in handy.

Fault Tolerance and Reliability

We need to consider what happens if our cache server fails. As with other production-ready systems, it should fail gracefully without interrupting the flow of our backend system.

Here are some best practices to build a fault-tolerant and reliable system:

Always have a DB fallback.

Set timeouts and circuit breakers around cache calls.

Monitor cache hit ratios and latency.

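```javascript
async function getWithFallback(key) {
  try {
    const cached = await redis.get(key);
    if (cached !== null) return cached; // cache hit
  } catch (error) {
    // Cache is down or timed out: log it and continue to the database.
    logger.warn("Cache unavailable, falling back to DB");
  }
  // Reached on a cache miss or a cache failure.
  return await db.query(key);
}
```

This simple example queries your database when the key is missing from the cache or when something goes wrong with the cache server.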
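The timeout and circuit-breaker practices from the list above can be sketched as follows. Everything here is illustrative: `CircuitBreaker`, `withTimeout`, `guardedGet`, and the default thresholds are hypothetical names and values, and `cacheGet` / `dbGet` stand in for your own cache and database calls.

```javascript
// Opens after N consecutive failures, then allows a trial call after a cooldown.
class CircuitBreaker {
  constructor({ failureThreshold = 3, resetAfterMs = 10000, now = () => Date.now() } = {}) {
    this.failureThreshold = failureThreshold;
    this.resetAfterMs = resetAfterMs;
    this.now = now;
    this.failures = 0;
    this.openedAt = null; // null means the circuit is closed
  }

  isOpen() {
    if (this.openedAt === null) return false;
    // After the cooldown, let one trial request through (half-open).
    return this.now() - this.openedAt < this.resetAfterMs;
  }

  recordSuccess() {
    this.failures = 0;
    this.openedAt = null;
  }

  recordFailure() {
    this.failures += 1;
    if (this.failures >= this.failureThreshold) this.openedAt = this.now();
  }
}

// Rejects if the wrapped promise does not settle within `ms` milliseconds.
function withTimeout(promise, ms) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error("cache timeout")), ms);
  });
  return Promise.race([promise, timeout]).finally(() => clearTimeout(timer));
}

// Try the cache unless the breaker is open; always fall back to the DB.
async function guardedGet(breaker, cacheGet, dbGet, key, timeoutMs = 50) {
  if (!breaker.isOpen()) {
    try {
      const value = await withTimeout(cacheGet(key), timeoutMs);
      breaker.recordSuccess();
      if (value !== null && value !== undefined) return value;
    } catch (error) {
      breaker.recordFailure();
    }
  }
  return dbGet(key);
}
```

While the breaker is open, requests skip the cache entirely, so a struggling cache server is not hammered with calls that would only time out.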

Additionally, we need to observe and monitor our cache servers like our main servers.

Observability and Metrics

A distributed system is not production-ready without observability. Here are some metrics to track as you build your distributed cache.

Cache hit ratio

Eviction count

Latency (p95, p99)

Connection pool usage

Replication lag

You can use tools such as Prometheus + Grafana or Datadog. This gives visibility into when and how your cache starts misbehaving.
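As a rough idea of what sits behind two of these metrics, here is a small in-process sketch that tracks hit ratio and latency percentiles. `CacheMetrics` is a hypothetical helper; in production you would normally export counters and histograms to your monitoring tool rather than keep raw samples in memory.

```javascript
// Tracks cache hits, misses, and per-request latency samples.
class CacheMetrics {
  constructor() {
    this.hits = 0;
    this.misses = 0;
    this.latenciesMs = [];
  }

  record(hit, latencyMs) {
    if (hit) this.hits += 1;
    else this.misses += 1;
    this.latenciesMs.push(latencyMs);
  }

  // Fraction of lookups served from the cache (0 when nothing was recorded).
  hitRatio() {
    const total = this.hits + this.misses;
    return total === 0 ? 0 : this.hits / total;
  }

  // Nearest-rank percentile, e.g. percentile(95) for p95.
  percentile(p) {
    if (this.latenciesMs.length === 0) return 0;
    const sorted = [...this.latenciesMs].sort((a, b) => a - b);
    const rank = Math.ceil((p * sorted.length) / 100);
    return sorted[Math.max(0, rank - 1)];
  }
}
```

A falling hit ratio or a rising p99 is often the first visible sign of problems such as poorly chosen TTLs, hot keys, or an undersized cache.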

Here are a few ideas to show advanced thinking:

Hot Key Detection: Track frequently requested keys and prefetch them.

Near Cache Pattern: Keep a local in-memory cache synced with the distributed cache.

Compression: Compress large cached values to save memory.

Sliding Expiration: Extend the TTL whenever a key is actively accessed.
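Hot key detection, the first idea in this list, can start as simple per-window counting. The sketch below is one possible approach, with `HotKeyTracker` and its threshold as hypothetical names and values; at very high key counts you would likely switch to sampling or an approximate counter.

```javascript
// Counts accesses per key within a window and reports keys above a threshold.
class HotKeyTracker {
  constructor(threshold) {
    this.threshold = threshold;
    this.counts = new Map(); // key -> access count in the current window
  }

  record(key) {
    const count = (this.counts.get(key) || 0) + 1;
    this.counts.set(key, count);
    return count;
  }

  // Keys accessed at least `threshold` times, hottest first.
  hotKeys() {
    return [...this.counts]
      .filter(([, count]) => count >= this.threshold)
      .sort((a, b) => b[1] - a[1])
      .map(([key]) => key);
  }

  // Call at the end of each window (for example, once per minute).
  reset() {
    this.counts.clear();
  }
}
```

The keys returned by `hotKeys()` are candidates for prefetching, a longer TTL, or a copy in the local near cache.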

Final Answer

Here’s exactly how to put your answer forward to the interviewer:

“I’d design a distributed caching system using Redis with cache-aside pattern, LRU eviction, sharding via consistent hashing, and replication for fault tolerance. I’d add randomized TTLs and request coalescing to avoid cache stampedes, and expose metrics for observability. This design balances speed, consistency, and scalability for high-traffic systems.”

Designing a distributed caching system might sound simple — but it’s a deep dive into scalability, data consistency, distributed systems, and real-world resilience.

Every decision — from TTLs to hashing — impacts performance and reliability.

So next time an interviewer asks, “How would you design a distributed cache?”

Don’t just say “I’ll use Redis.”

Walk them through how you’d make it reliable, scalable, and production-grade.

I hope you learned something today: Spread the love. Share this newsletter with at least two of your friends today.

Also, let me know if you enjoy this new series and if you want me to continue breaking down interview questions like this.

LAST WORD 👋

How am I doing?

I love hearing from readers, and I’m always looking for feedback. How am I doing with The Backend Weekly? Is there anything you’d like to see more or less of? Which aspects of the newsletter do you enjoy the most?

Hit reply and say hello - I’d love to hear from you!

Stay awesome,

Solomon (solomoneseme.com)

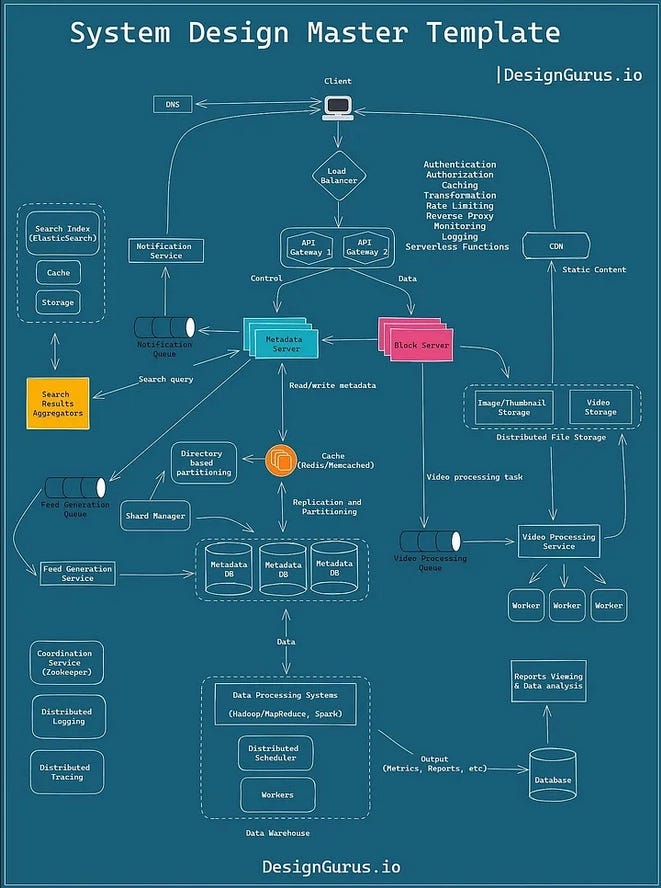

All the best for your System design interviews!!
